Google GCP: The Simplest Load Balancing Cluster with Failover on Windows and Linux

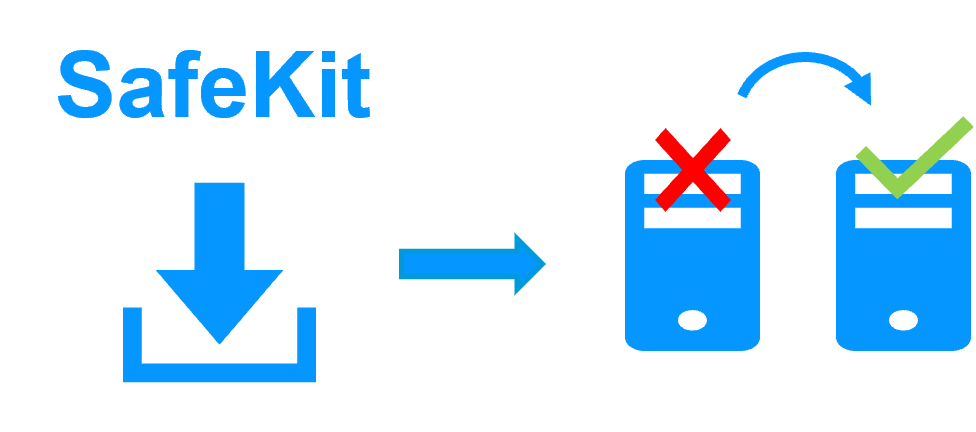

Evidian SafeKit

The solution in Google GCP

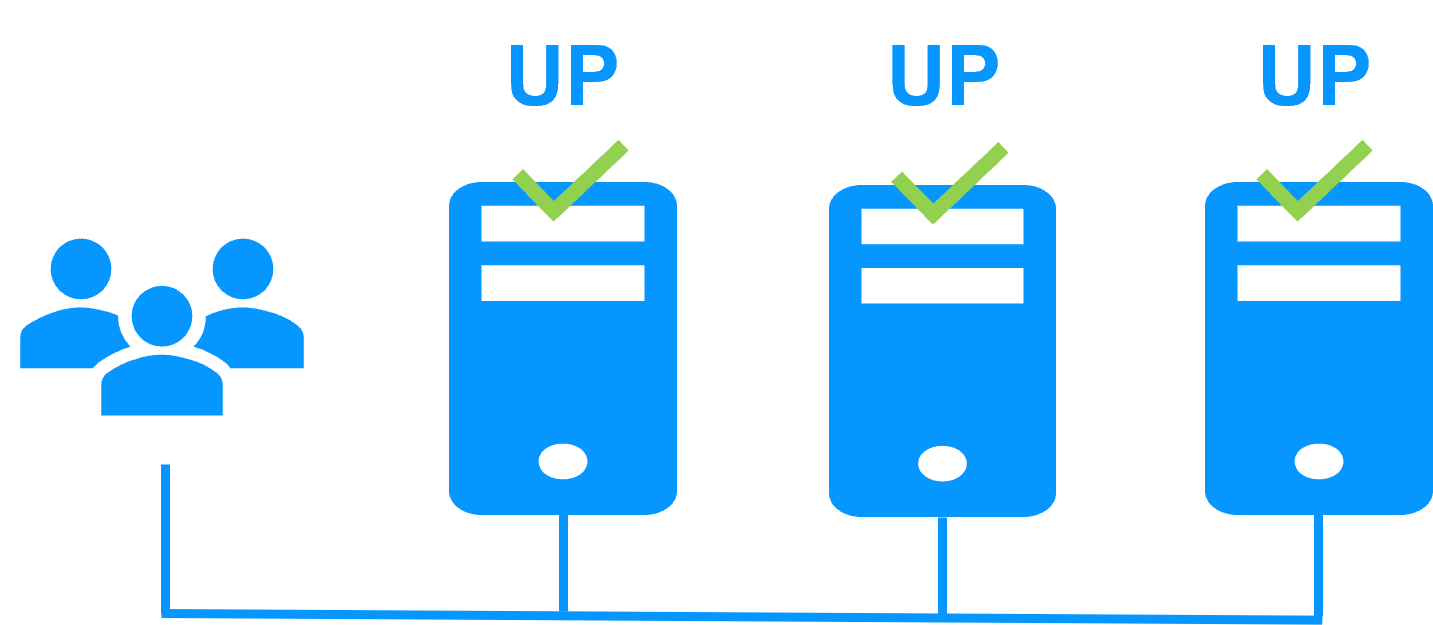

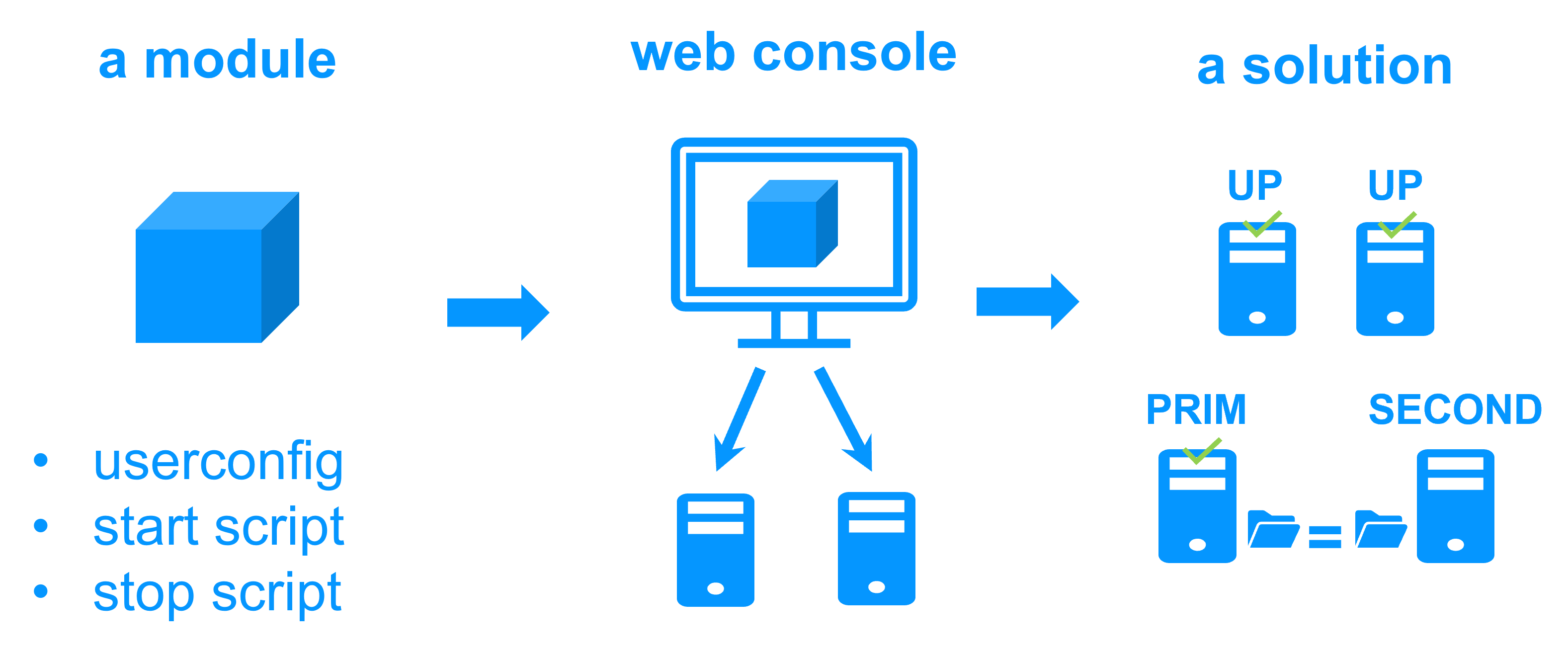

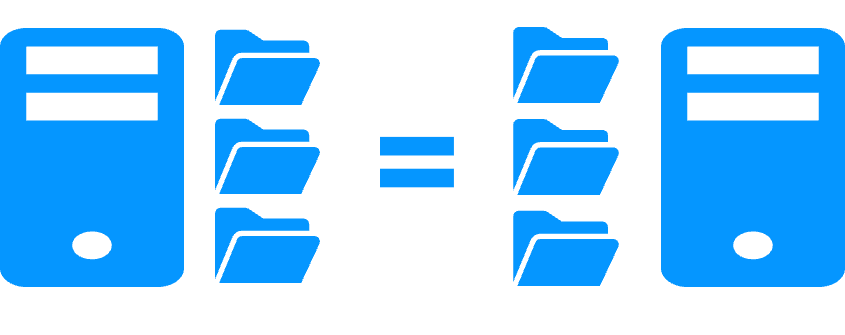

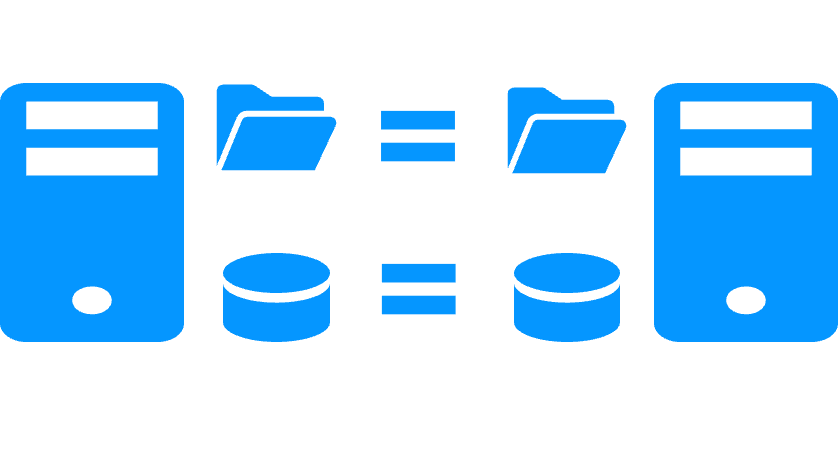

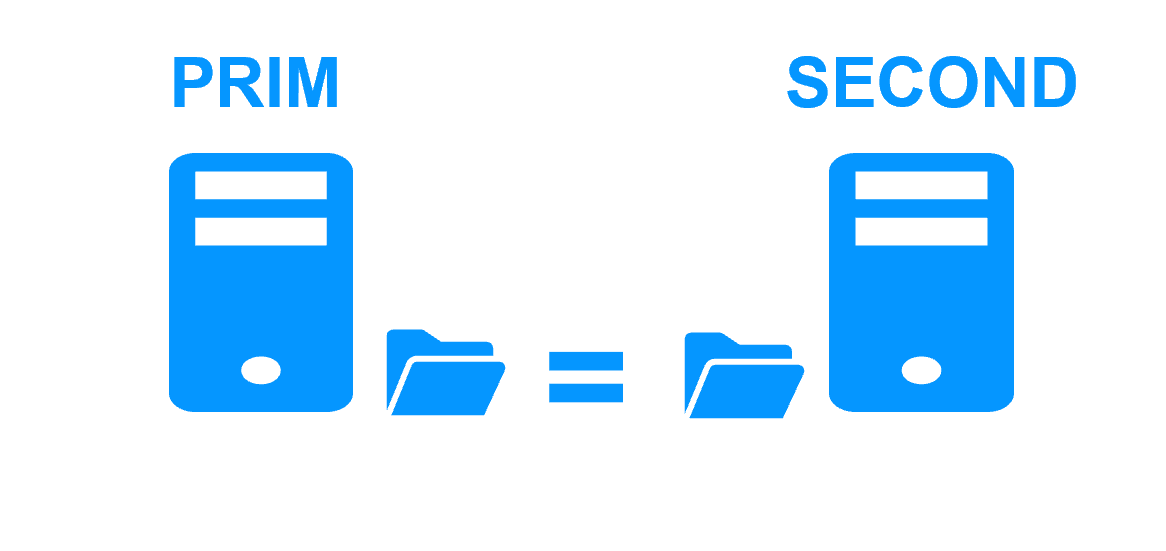

Evidian SafeKit provides load balancing and failover in Google GCP between two or more redundant Windows or Linux servers.

This article explains how to quickly implement a Google GCP cluster without specific skills.

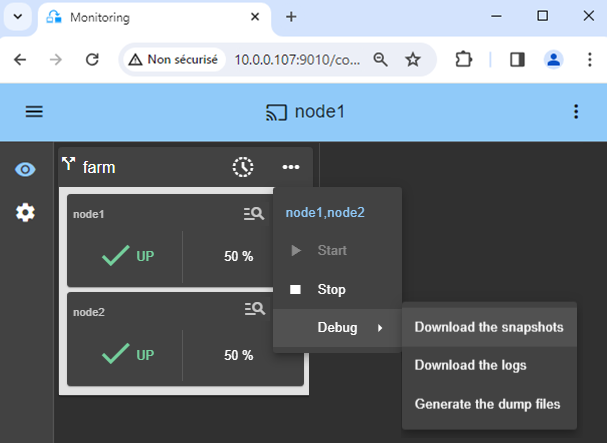

A generic product

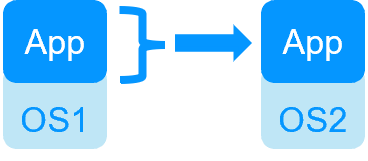

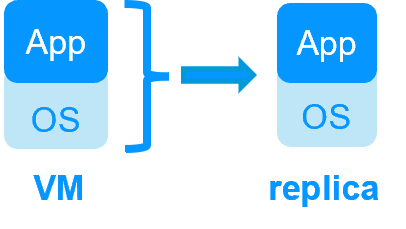

Note that SafeKit is a generic product on Windows and Linux.

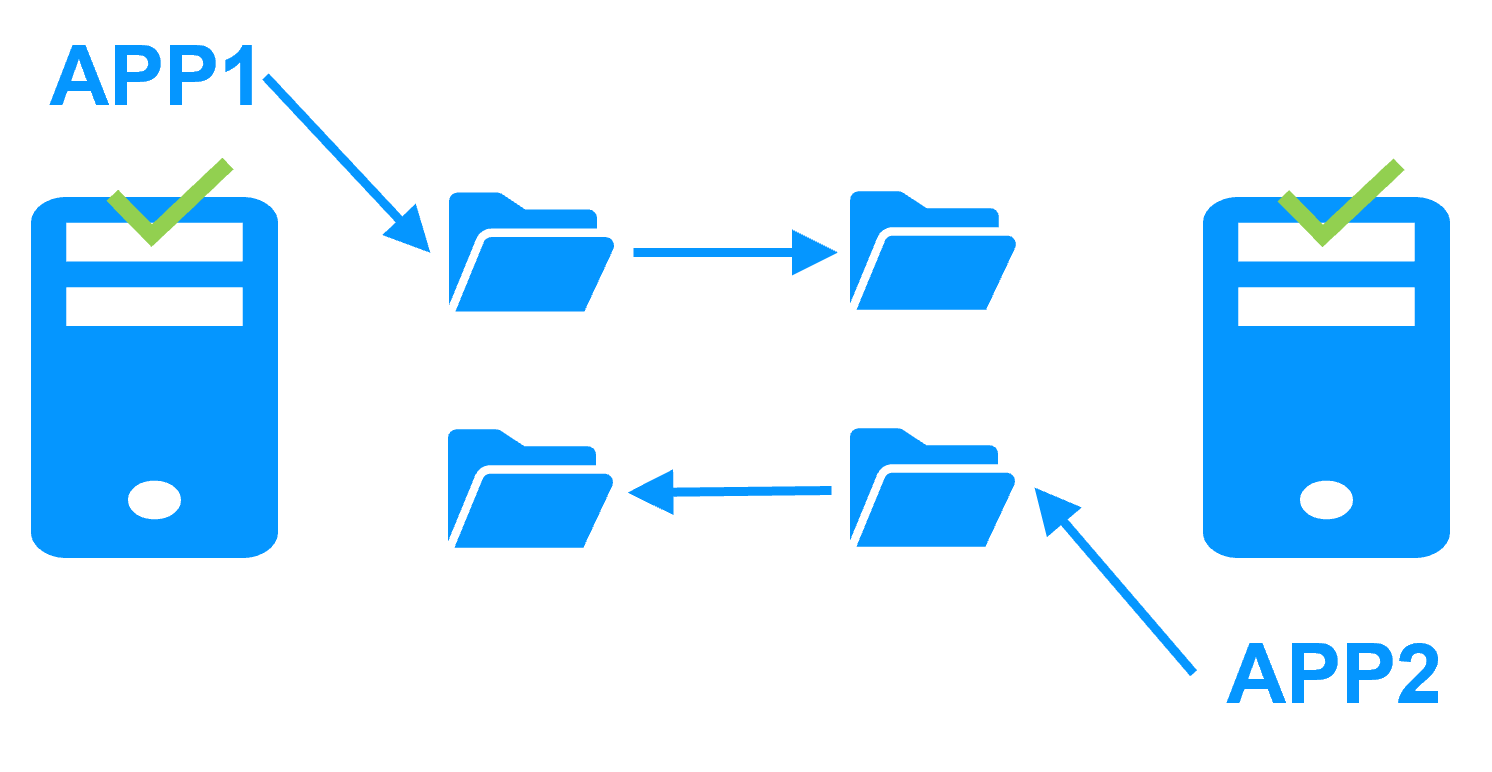

With the same product, you can implement real-time replication and failover of any file directory and service, databases, complete Hyper-V or KVM virtual machines, Docker, Kubernetes and Cloud applications.

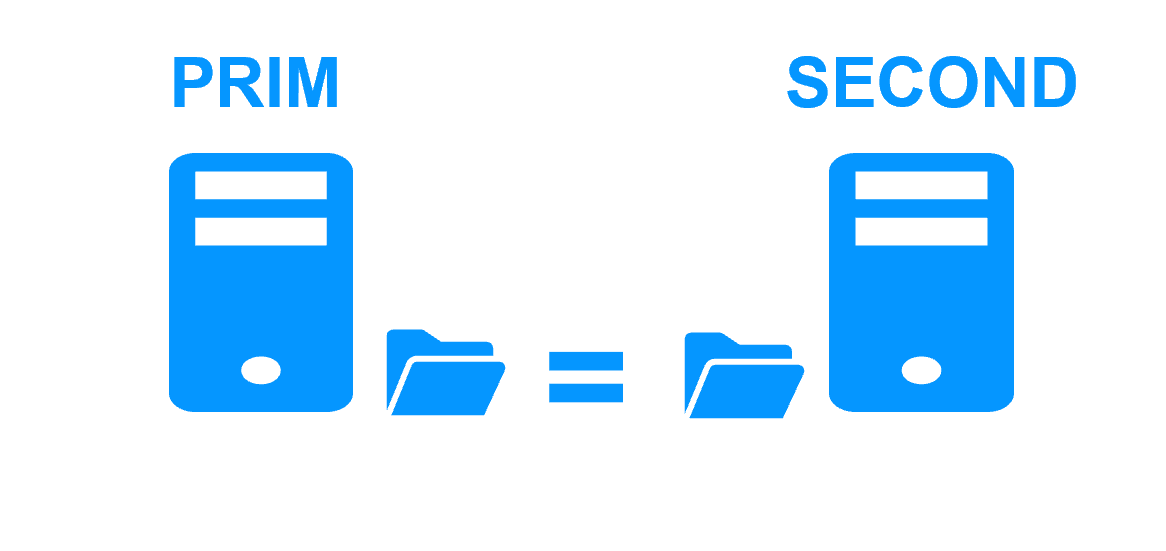

Architecture

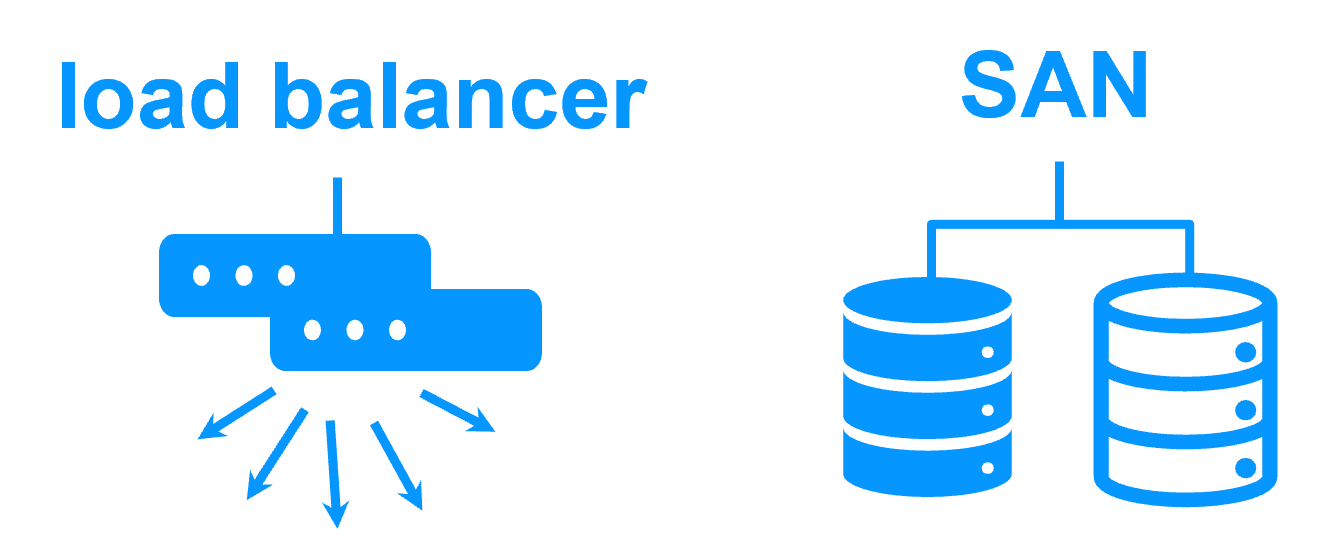

How does it work in Google GCP?

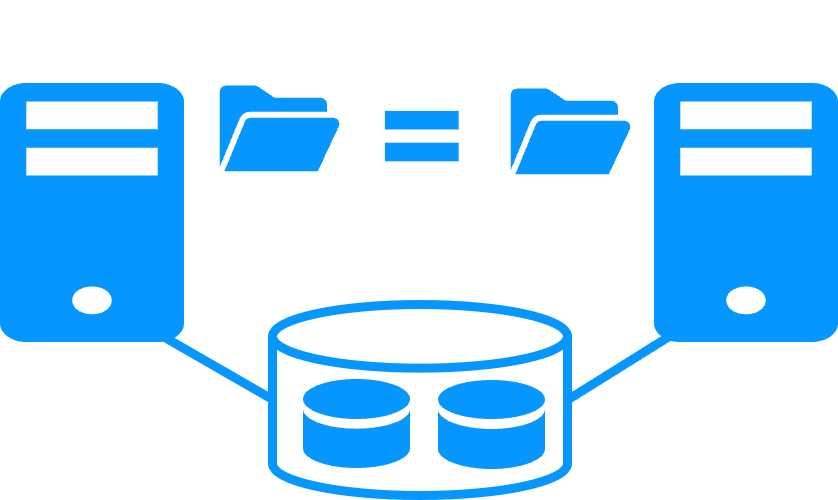

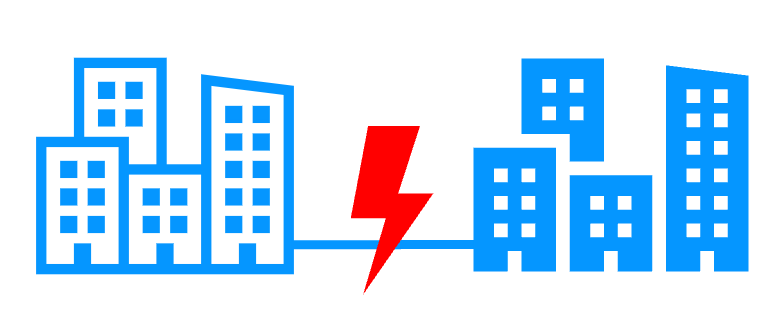

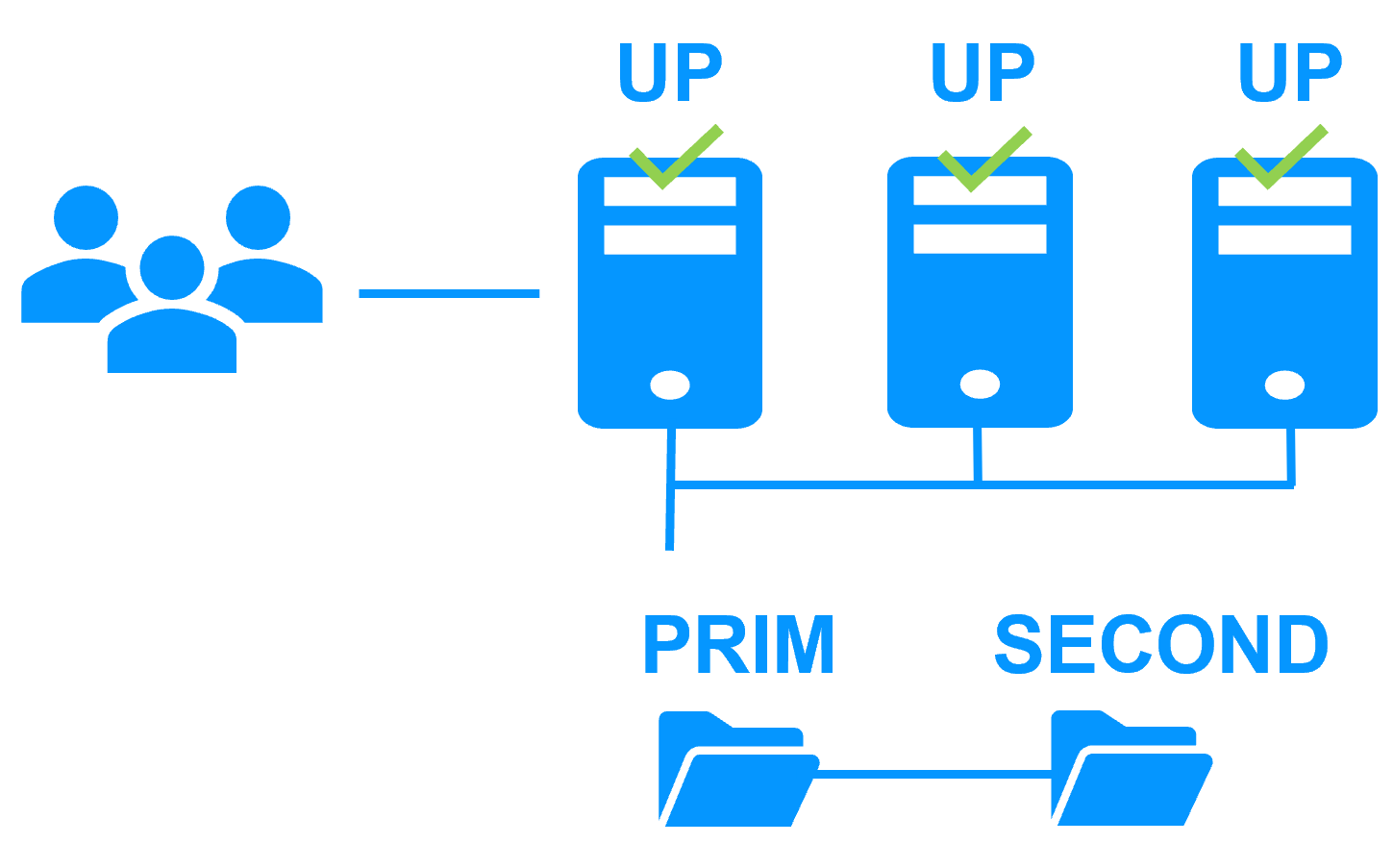

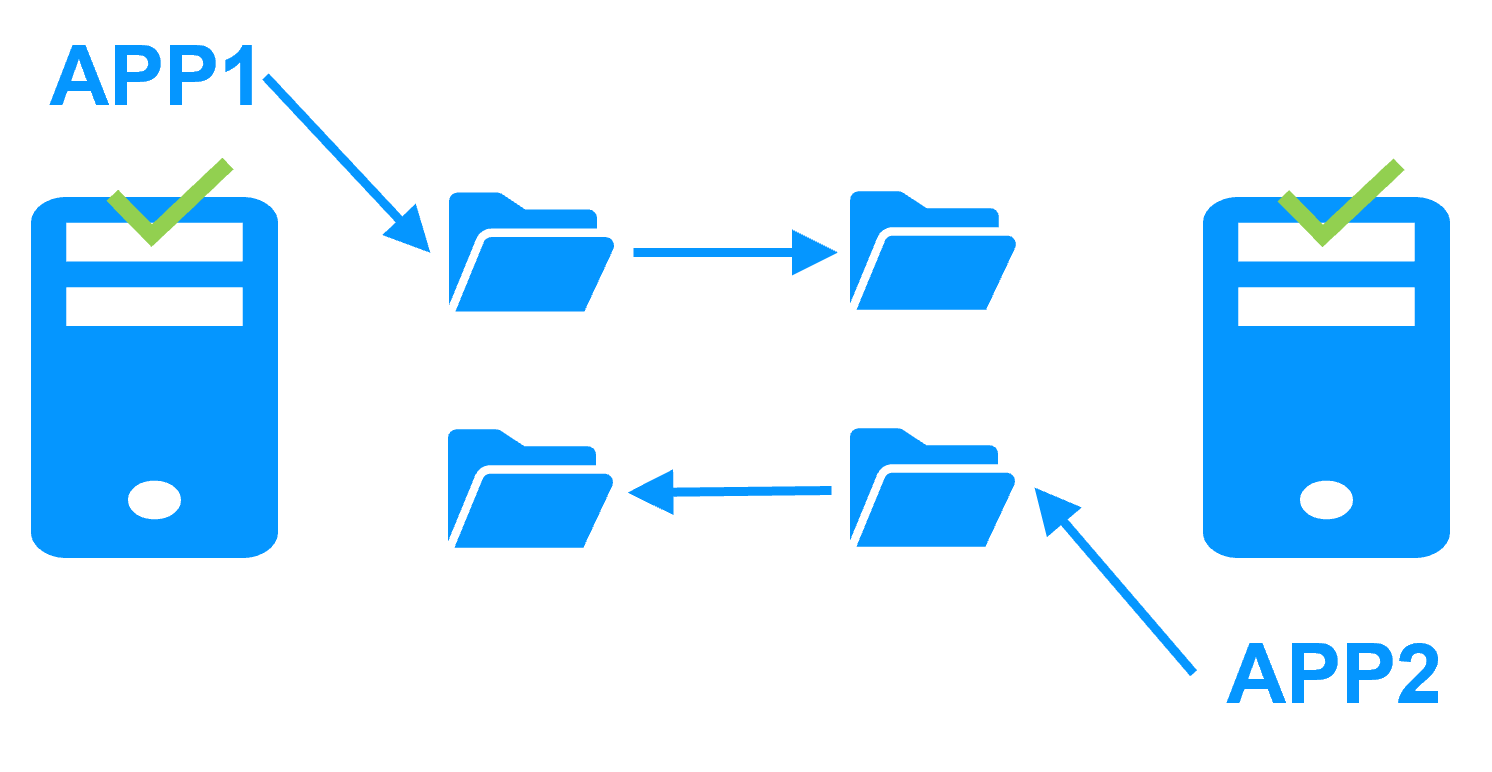

- The servers are running in different availability zones.

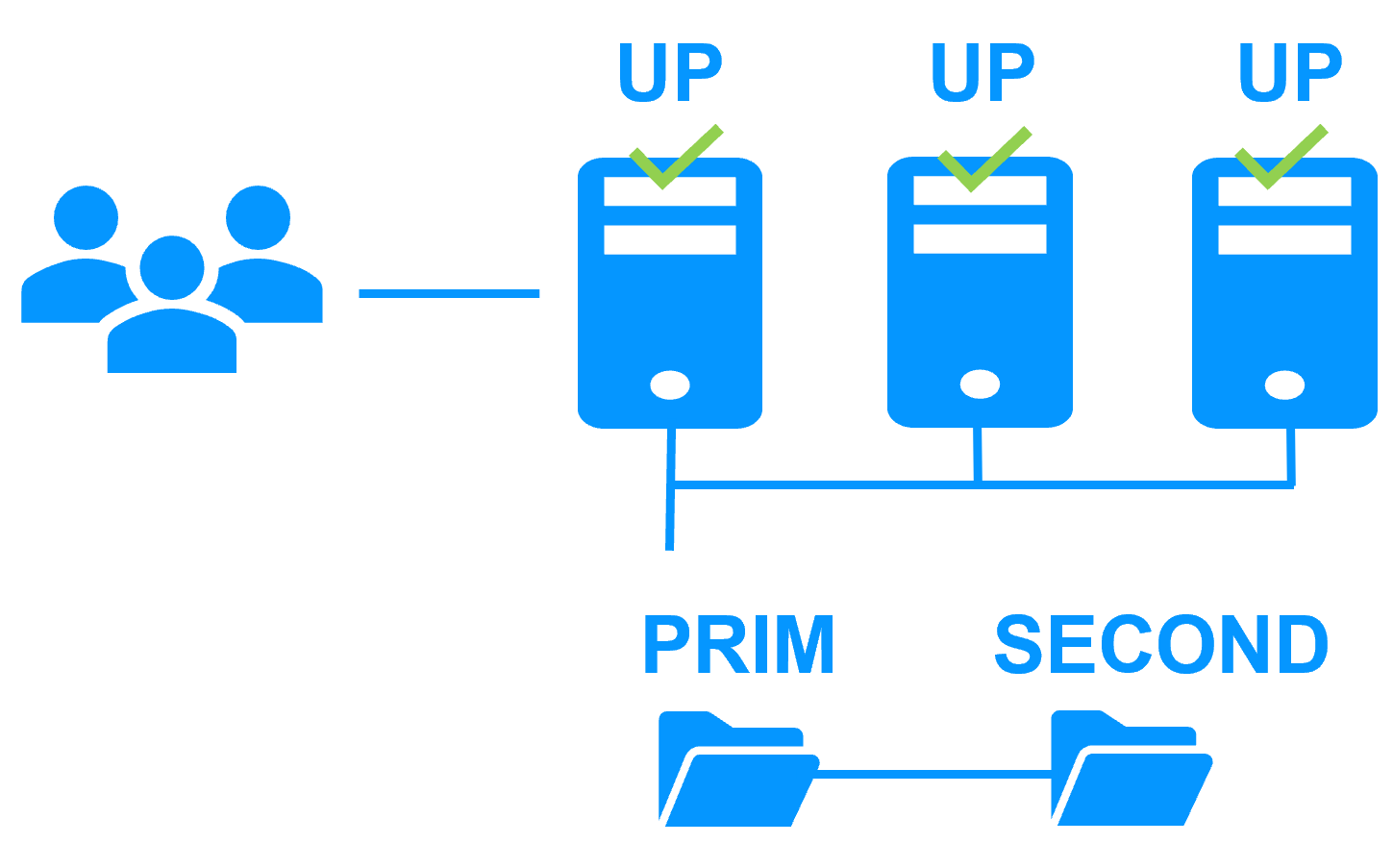

- The critical application is running in all servers of the farm.

- Users are connected to a virtual IP address which is configured in the Google GCP load balancer.

- SafeKit provides a generic health check for the load balancer.

When the farm module is stopped in a server, the health check returns NOK to the load balancer, which stops sending requests to that server.

The same behavior happens when there is a hardware failure.

- In each server, SafeKit monitors the critical application with process checkers and custom checkers.

- SafeKit automatically restarts the critical application in a server when there is a software failure, thanks to restart scripts.

- A connector for the SafeKit web console is installed in each server.

Thus, the load balancing cluster can be managed in a very simple way to avoid human errors.

Partners, the success with SafeKit

This platform-agnostic solution is ideal for a partner who resells a critical application and wants to offer many customers a redundancy and high availability option that is easy to deploy.

With many references in many countries won by partners, SafeKit has proven to be the easiest solution to implement for redundancy and high availability of building management, video management, access control, SCADA software...

Configuration of the Google GCP load balancer

The load balancer must be configured with a virtual IP address.

The load balancer must also be configured to periodically send health check requests to the nodes.

For that, SafeKit provides a health check which runs inside the nodes and which

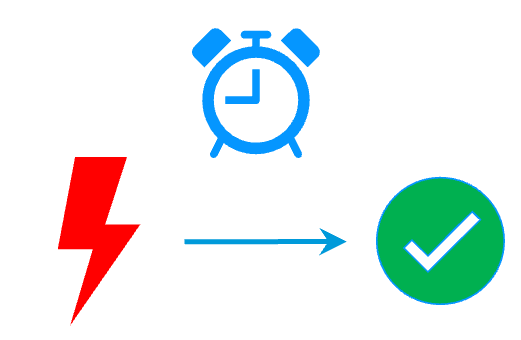

- returns OK when the farm module state is UP (green)

- returns NOT FOUND in all other states

You must configure the Google GCP load balancer with:

- HTTP protocol

- port 9010, the SafeKit web server port

- URL /var/modules/farm/ready.txt (if farm is the module name that you will deploy later)
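The probe that the load balancer sends can be reproduced by hand to check a node before wiring it into Google GCP. The following is a minimal sketch, not SafeKit or Google code. It assumes that "OK" corresponds to an HTTP 200 response and "NOT FOUND" to an HTTP 404 response, and the function names and the node address in the usage note are hypothetical.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen


def health_url(host: str, module: str = "farm", port: int = 9010) -> str:
    """Build the health check URL described above for one node."""
    return f"http://{host}:{port}/var/modules/{module}/ready.txt"


def is_healthy(status_code: int) -> bool:
    """Assumption: only HTTP 200 means the farm module is UP (green)."""
    return status_code == 200


def probe(host: str, module: str = "farm", timeout: float = 2.0) -> bool:
    """Send one probe; an unreachable node counts as unhealthy."""
    try:
        with urlopen(health_url(host, module), timeout=timeout) as response:
            return is_healthy(response.status)
    except HTTPError as error:
        # Raised for 4xx/5xx answers, e.g. 404 when the module is not UP.
        return is_healthy(error.code)
    except (URLError, OSError):
        # Connection refused, timeout or name resolution failure.
        return False
```

For example, `probe("10.0.0.11")` (a placeholder address) would be expected to return `True` only while the farm module on that node is UP (green).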

For more information, see the configuration of the Google GCP load balancer.

Do not configure a virtual IP address and load balancing rules at step 4 in the step by step configuration below. The virtual IP address and load balancing rules are already set in the Google GCP load balancer. Setting a virtual IP and load balancing rules at step 4 is useful for on-premise configuration only.

Configuration of the Google GCP network security

The network security must be configured to enable communications for the following protocols and ports:

- UDP 4800 for the safeadmin service (between SafeKit nodes)

- TCP 9010 for the load balancer health check and for the SafeKit web console running in http mode

- TCP 9001 to configure the https mode for the console

- TCP 9453 for the SafeKit web console running in https mode
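Once the firewall rules are in place, the TCP ports above can be checked from another machine. The sketch below is an illustration under stated assumptions: the dictionary and function names are hypothetical, and UDP 4800 is only listed, because a simple connect test cannot verify a UDP port.

```python
import socket

# TCP ports taken from the list above.
TCP_PORTS = {
    9010: "load balancer health check and web console (http)",
    9001: "configuration of the https mode for the console",
    9453: "web console (https)",
}
# Listed for completeness; not testable with a TCP connect.
UDP_PORTS = {4800: "safeadmin service between SafeKit nodes"}


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered (timeout) or unreachable.
        return False


def report(host: str) -> dict:
    """Map each TCP port listed above to its reachability on one node."""
    return {port: tcp_port_open(host, port) for port in TCP_PORTS}
```

A port reported as closed may simply mean that the corresponding service is not running yet (for instance, 9453 before https is configured), so the result is a hint rather than a proof of a firewall problem.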

Package installation on Windows

- Download the free version of SafeKit on 2 Windows nodes.

Note: the free version includes all SafeKit features. At the end of the trial, you can activate permanent license keys without uninstalling the package.

- To open the Windows firewall, on both nodes start a powershell as administrator, and type

c:/safekit/private/bin/firewallcfg add

- To initialize the password for the default admin user of the web console, on both nodes start a powershell as administrator, and type

c:/safekit/private/bin/webservercfg.ps1 -passwd pwd

  - Use alphanumeric characters for the password (no special characters).

  - pwd must be the same on both nodes.

- Exclude from antivirus scans C:\safekit\ (the default installation directory) and all replicated folders that you are going to define.

Antiviruses may face detection challenges with SafeKit due to its close integration with the OS, virtual IP mechanisms, real-time replication and restart of critical services.

Package installation on Linux

- Install the free version of SafeKit on 2 Linux nodes.

Note: the free version includes all SafeKit features. At the end of the trial, you can activate permanent license keys without uninstalling the package.

- After downloading the safekit_xx.bin package, execute it to extract the rpm and the safekitinstall script, and then execute the safekitinstall script.

- Answer yes to firewall automatic configuration.

- Set the password for the web console and the default user admin.

  - Use alphanumeric characters for the password (no special characters).

  - The password must be the same on both nodes.

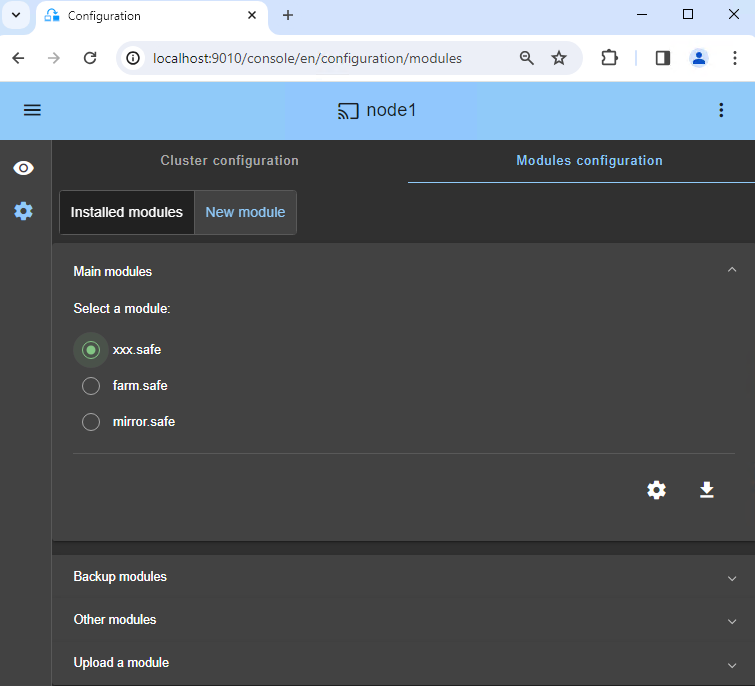

Note: the generic farm.safe module that you are going to configure is delivered inside the package.

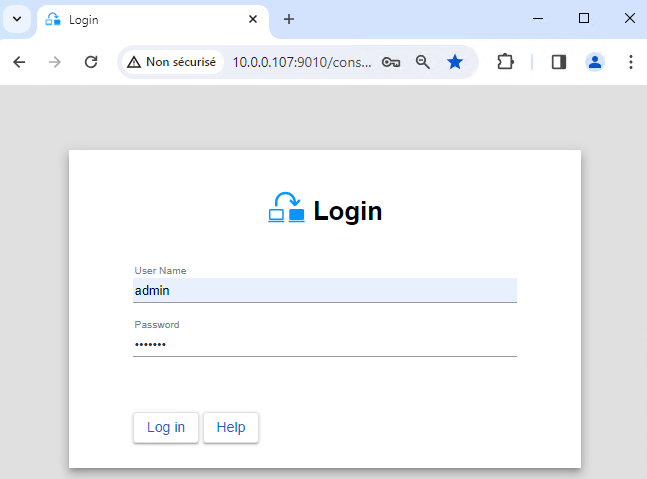

1. Launch the SafeKit console

- Launch the web console in a browser on one cluster node by connecting to http://localhost:9010.

- Enter admin as user name and the password defined during installation.

You can also run the console in a browser on a workstation external to the cluster.

The configuration of SafeKit is done on both nodes from a single browser.

To secure the web console, see 11. Securing the SafeKit web service in the User's Guide.

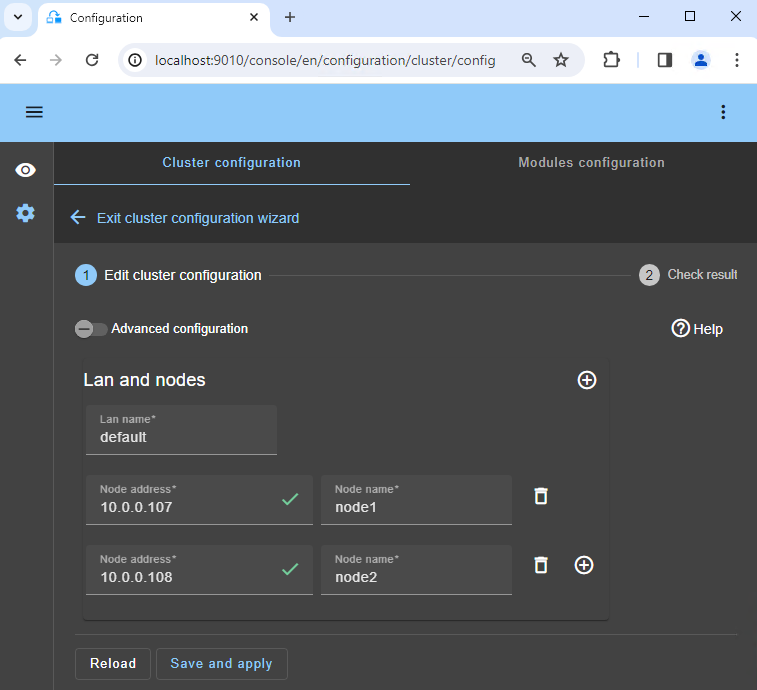

2. Configure node addresses

- Enter the node IP addresses, press the Tab key to check connectivity and fill node names.

- Then, click on Save and apply to save the configuration.

If either node1 or node2 has a red color, check connectivity of the browser to both nodes and check firewall on both nodes for troubleshooting.

In a farm architecture, you can define more than 2 nodes.

If you want, you can add a new LAN for a second heartbeat.

This operation will place the IP addresses in the cluster.xml file on both nodes (more information in the training with the command line).

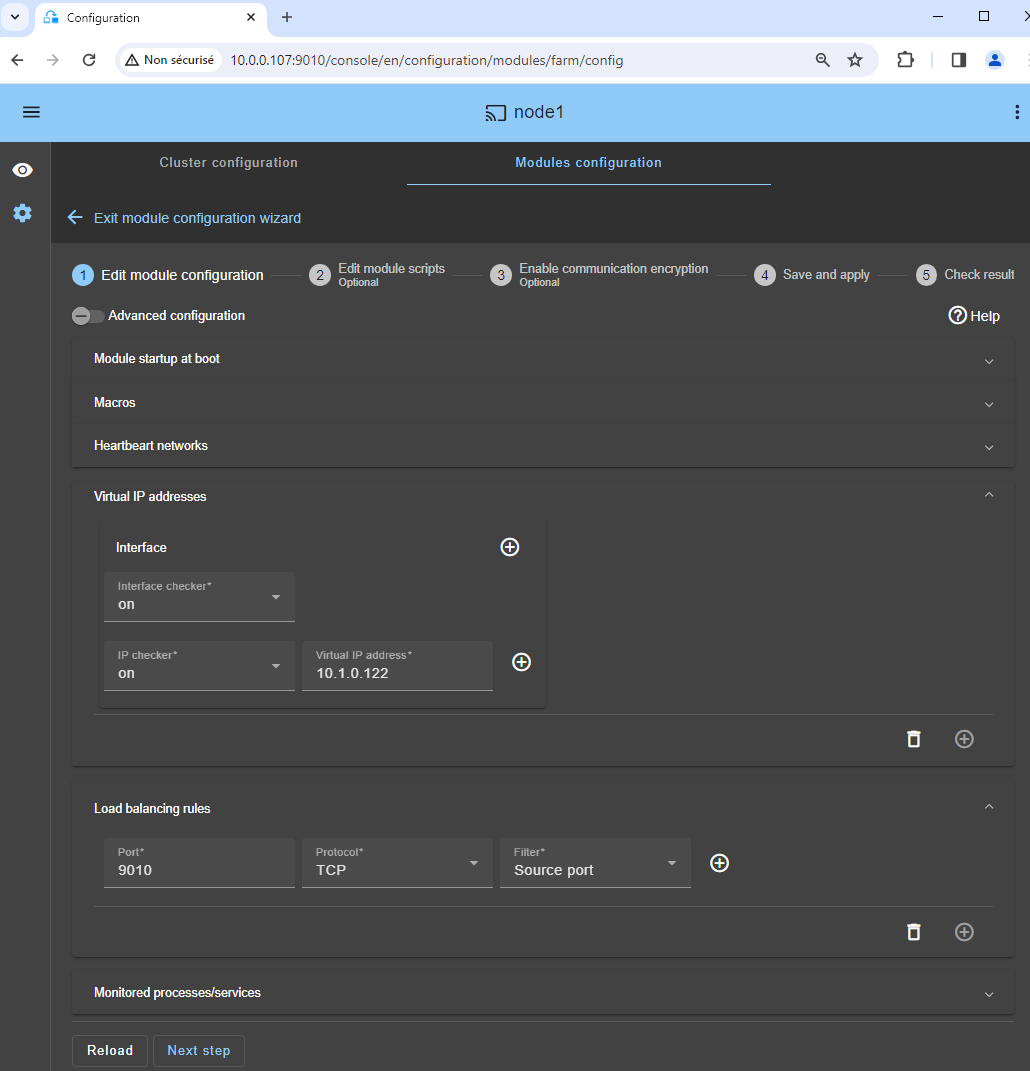

4. Configure the module

- Choose an Automatic start of the module at boot without delay in Module startup at boot.

- Normally, you have a single Heartbeat network (except if you add a LAN at step 2).

- Enter a Virtual IP address. A virtual IP address is a standard IP address in the same IP network (same subnet) as the IP addresses of both nodes.

  Application clients must be configured with the virtual IP address (or the DNS name associated with the virtual IP address).

- Set the service port to load balance (ex.: TCP 80 for HTTP, TCP 443 for HTTPS, TCP 9010 in the example).

- Set the load balancing rule, Source address or Source port:

  - with the source IP address of the client, the same client will be connected to the same node in the farm on multiple TCP sessions and retrieve its context on the node.

  - with the source TCP port of the client, the same client will be connected to different nodes in the farm on multiple TCP sessions (without retrieving a context).

- Note that if a process name is displayed in Monitored processes/services, it will be monitored with a restart action in case of failure. Configuring a wrong process name will cause the module to stop right after its start.
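The difference between the two load balancing rules can be illustrated with a toy distribution function. This is only a sketch of the idea and not SafeKit's actual algorithm, which the text does not describe: it assumes a simple modulo over the node list, and the node names, client address and ports are hypothetical.

```python
import ipaddress


def node_for_source_address(client_ip: str, nodes: list) -> str:
    """Rule on source address: the key is the client IP only."""
    return nodes[int(ipaddress.ip_address(client_ip)) % len(nodes)]


def node_for_source_port(client_port: int, nodes: list) -> str:
    """Rule on source port: the key changes with every TCP session."""
    return nodes[client_port % len(nodes)]


nodes = ["node1", "node2"]           # hypothetical node names
sessions = [50000, 50001, 50002]     # source ports of 3 TCP sessions
client = "203.0.113.7"               # documentation-range address

# Same client, several sessions, rule on source address: one node only.
print({node_for_source_address(client, nodes) for _ in sessions})
# Same client, several sessions, rule on source port: several nodes.
print([node_for_source_port(port, nodes) for port in sessions])
```

With the source address rule, every session of one client lands on the same node, which is what lets the client retrieve its context. With the source port rule, sessions of one client spread across the farm.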

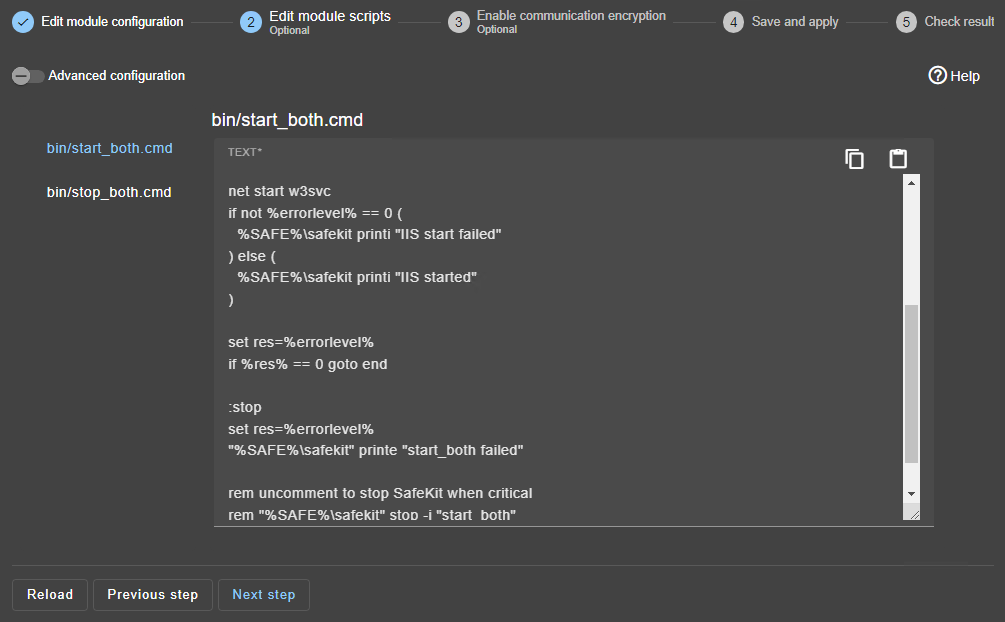

5. Edit scripts (optional)

- start_both and stop_both must contain starting and stopping of the application (example provided for Microsoft IIS on the right).

- You can add new services in these scripts.

- Check that the names of the services started in these scripts are those installed on both nodes, otherwise modify them in the scripts.

- On Windows and on both nodes, with the Windows services manager, set Boot Startup Type = Manual for all the services registered in start_both (SafeKit controls the start of services in start_both).

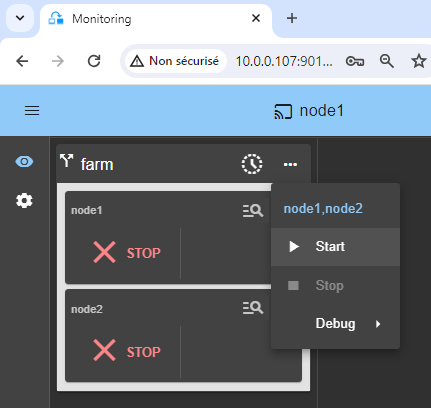

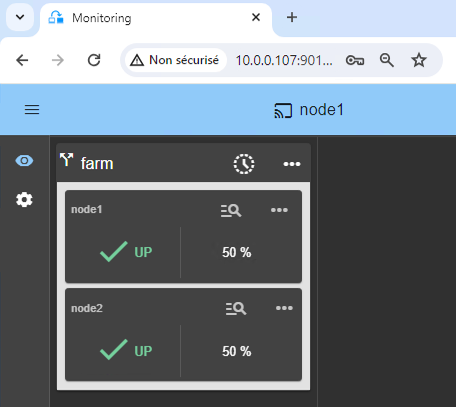

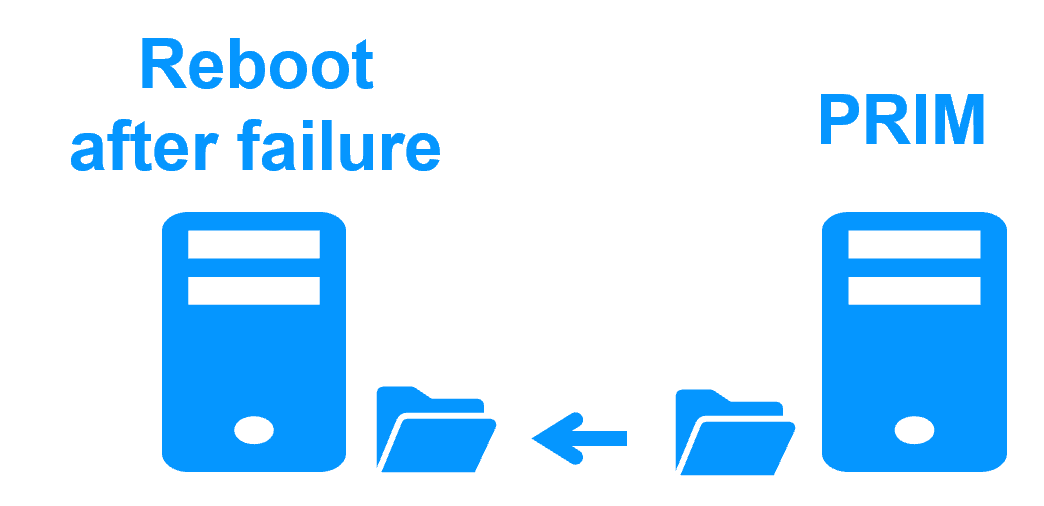

8. Wait for the transition to UP (green) / UP (green)

Node 1 and node 2 should reach the UP (green) state, which means that the start_both script has been executed on node 1 and node 2.

If UP (green) is not reached or if the application is not started, analyze why with the module log of node 1 or node 2.

- Click the "log" icon of node1 or node2 to open the module log and look for error messages such as a checker detecting an error and stopping the module.

- Click on start_both in the log: output messages of the script are displayed on the right and errors can be detected, such as a service incorrectly started.

9. Testing

SafeKit brings a built-in test in the product:

- Configure a rule for TCP port 9010 with a load balancing on source TCP port.

- Connect an external workstation outside the farm nodes.

- Start a browser on http://virtual-ip:9010/safekit/mosaic.html.

- Stop one UP (green) node by scrolling down its contextual menu and clicking Stop.

- Check that there are no more TCP connections on the stopped node and on the virtual IP address.

You should see a mosaic of colors that depends on which nodes answer the HTTP requests.

10. Support

- To get support, take 2 SafeKit Snapshots (2 .zip files), one for each node.

- If you have an account on https://support.evidian.com, upload them in the call desk tool.

Internal files of a SafeKit / Google GCP load balancing cluster with failover

Go to the Advanced Configuration tab to edit these files.

Internal files of the Windows farm.safe module

userconfig.xml on Windows (description in the User's Guide)

<!DOCTYPE safe>

<safe>

<service mode="farm" maxloop="3" loop_interval="24">

<!-- Farm topology configuration -->

<!-- Names or IP addresses on the default network are set during initialization in the console -->

<farm>

<lan name="default" />

</farm>

<!-- Software Error Detection Configuration -->

<!-- Replace

* PROCESS_NAME by the name of the process to monitor

-->

<errd polltimer="10">

<proc name="PROCESS_NAME" atleast="1" action="restart" class="both" />

</errd>

<!-- User scripts activation -->

<user nicestoptimeout="300" forcestoptimeout="300" logging="userlog" />

</service>

</safe>
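Because a wrong process name makes the module stop right after its start, it can help to inspect userconfig.xml before applying it. The sketch below parses the template shown above and reports the monitored process names. It is an illustration only: the helper names are hypothetical, the XML is embedded as a string instead of being read from the module directory, and `w3wp.exe` in the usage note is just an example value.

```python
import xml.etree.ElementTree as ET

# Copy of the farm template above (comments omitted).
USERCONFIG = """<!DOCTYPE safe>
<safe>
<service mode="farm" maxloop="3" loop_interval="24">
  <farm>
    <lan name="default" />
  </farm>
  <errd polltimer="10">
    <proc name="PROCESS_NAME" atleast="1" action="restart" class="both" />
  </errd>
  <user nicestoptimeout="300" forcestoptimeout="300" logging="userlog" />
</service>
</safe>"""


def monitored_processes(xml_text: str) -> list:
    """Return the name attribute of every <proc> element."""
    root = ET.fromstring(xml_text)
    return [proc.get("name") for proc in root.iter("proc")]


def has_placeholder(xml_text: str) -> bool:
    """True if the PROCESS_NAME placeholder was left unreplaced."""
    return "PROCESS_NAME" in monitored_processes(xml_text)
```

For instance, `has_placeholder(USERCONFIG)` is true for the unmodified template and becomes false once the placeholder is replaced, e.g. with `USERCONFIG.replace("PROCESS_NAME", "w3wp.exe")`.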

start_both on Windows

@echo off

rem Script called on all servers for starting applications

rem For logging into SafeKit log use:

rem "%SAFE%\safekit" printi | printe "message"

rem stdout goes into Application log

echo "Running start_both %*"

set res=0

rem Fill with your services start call

set res=%errorlevel%

if %res% == 0 goto end

:stop

set res=%errorlevel%

"%SAFE%\safekit" printe "start_both failed"

rem uncomment to stop SafeKit when critical

rem "%SAFE%\safekit" stop -i "start_both"

:end

stop_both on Windows

@echo off

rem Script called on all servers for stopping application

rem For logging into SafeKit log use:

rem "%SAFE%\safekit" printi | printe "message"

rem ----------------------------------------------------------

rem

rem 2 stop modes:

rem

rem - graceful stop

rem call standard application stop with net stop

rem

rem - force stop (%1=force)

rem kill application's processes

rem

rem ----------------------------------------------------------

rem stdout goes into Application log

echo "Running stop_both %*"

set res=0

rem default: no action on forcestop

if "%1" == "force" goto end

rem Fill with your services stop call

rem If necessary, uncomment to wait for the real stop of services

rem "%SAFEBIN%\sleep" 10

if %res% == 0 goto end

"%SAFE%\safekit" printe "stop_both failed"

:end

Internal files of the Linux farm.safe module

userconfig.xml on Linux (description in the User's Guide)

<!DOCTYPE safe>

<safe>

<service mode="farm" maxloop="3" loop_interval="24">

<!-- Farm topology configuration for the membership protocol -->

<!-- Names or IP addresses on the default network are set during initialization in the console -->

<farm>

<lan name="default" />

</farm>

<!-- Software Error Detection Configuration -->

<!-- Replace

* PROCESS_NAME by the name of the process to monitor

-->

<errd polltimer="10">

<proc name="PROCESS_NAME" atleast="1" action="restart" class="both" />

</errd>

<!-- User scripts activation -->

<user nicestoptimeout="300" forcestoptimeout="300" logging="userlog" />

</service>

</safe>

start_both on Linux

#!/bin/sh

# Script called on all servers for starting application

# For logging into SafeKit log use:

# $SAFE/safekit printi | printe "message"

# stdout goes into Application log

echo "Running start_both $*"

res=0

# Fill with your application start call

if [ $res -ne 0 ] ; then

$SAFE/safekit printe "start_both failed"

# uncomment to stop SafeKit when critical

# $SAFE/safekit stop -i "start_both"

fi

stop_both on Linux

#!/bin/sh

# Script called on all servers for stopping application

# For logging into SafeKit log use:

# $SAFE/safekit printi | printe "message"

#----------------------------------------------------------

#

# 2 stop modes:

#

# - graceful stop

# call standard application stop

#

# - force stop ($1=force)

# kill application's processes

#

#----------------------------------------------------------

# stdout goes into Application log

echo "Running stop_both $*"

res=0

# default: no action on forcestop

[ "$1" = "force" ] && exit 0

# Fill with your application stop call

[ $res -ne 0 ] && $SAFE/safekit printe "stop_both failed"


Advanced clustering architectures

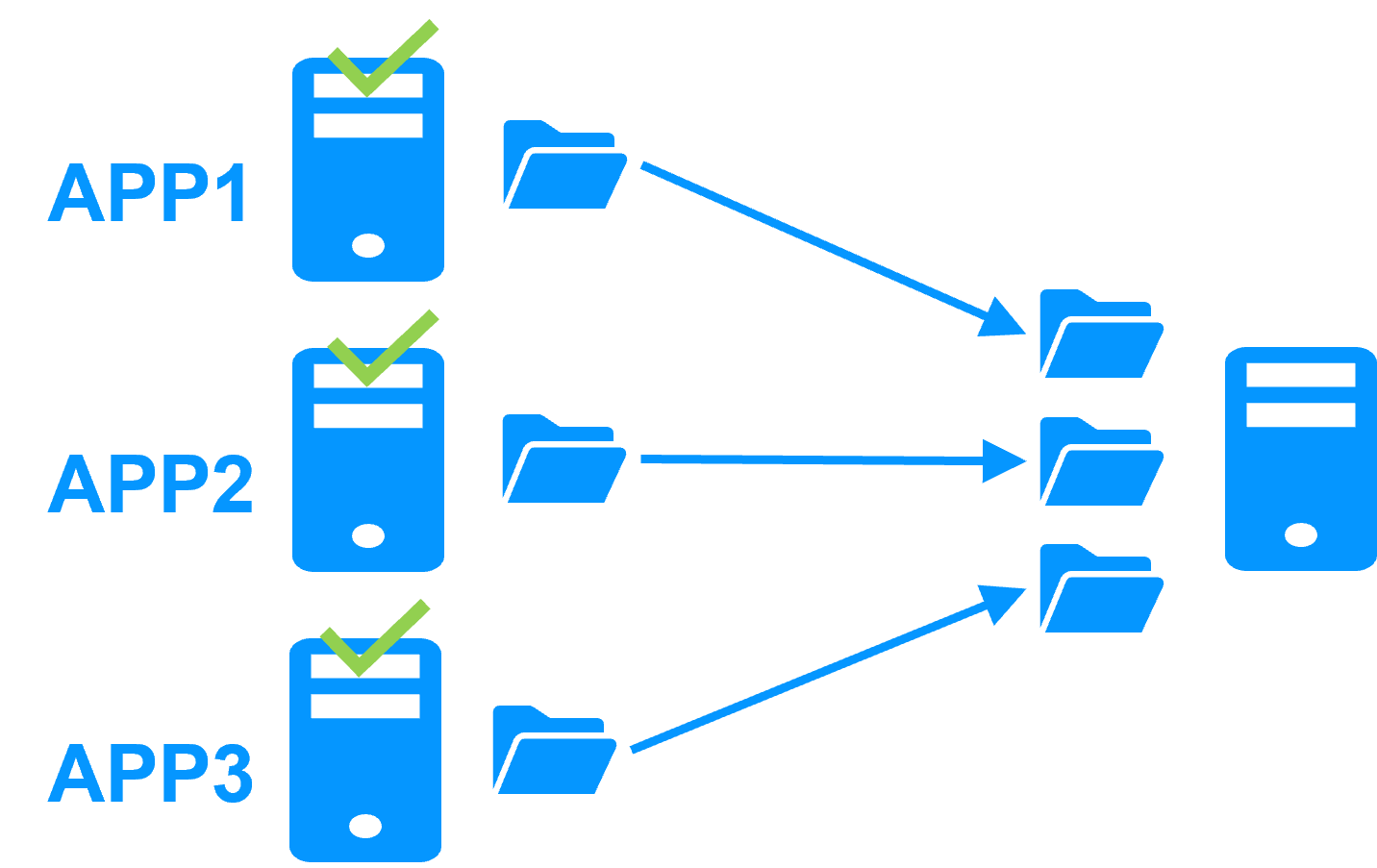

Several modules can be deployed on the same cluster. Thus, advanced clustering architectures can be implemented:

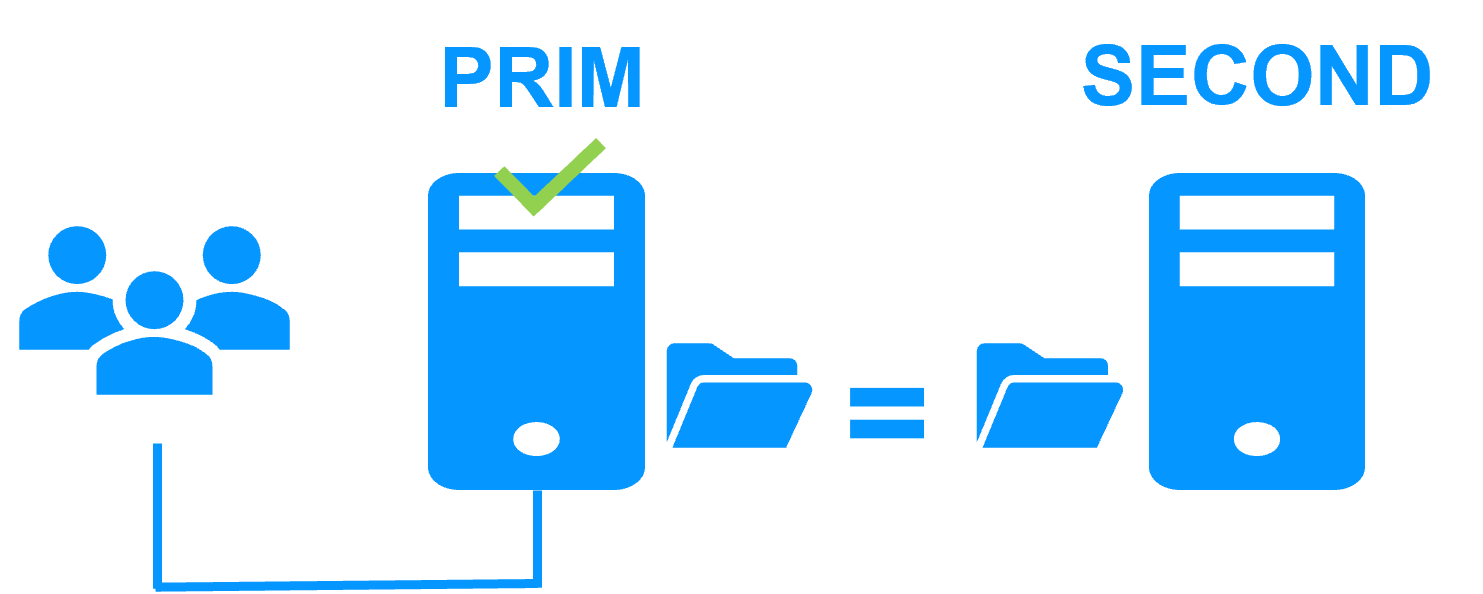

- the farm+mirror cluster built by deploying a farm module and a mirror module on the same cluster,

- the active/active cluster with replication built by deploying several mirror modules on 2 servers,

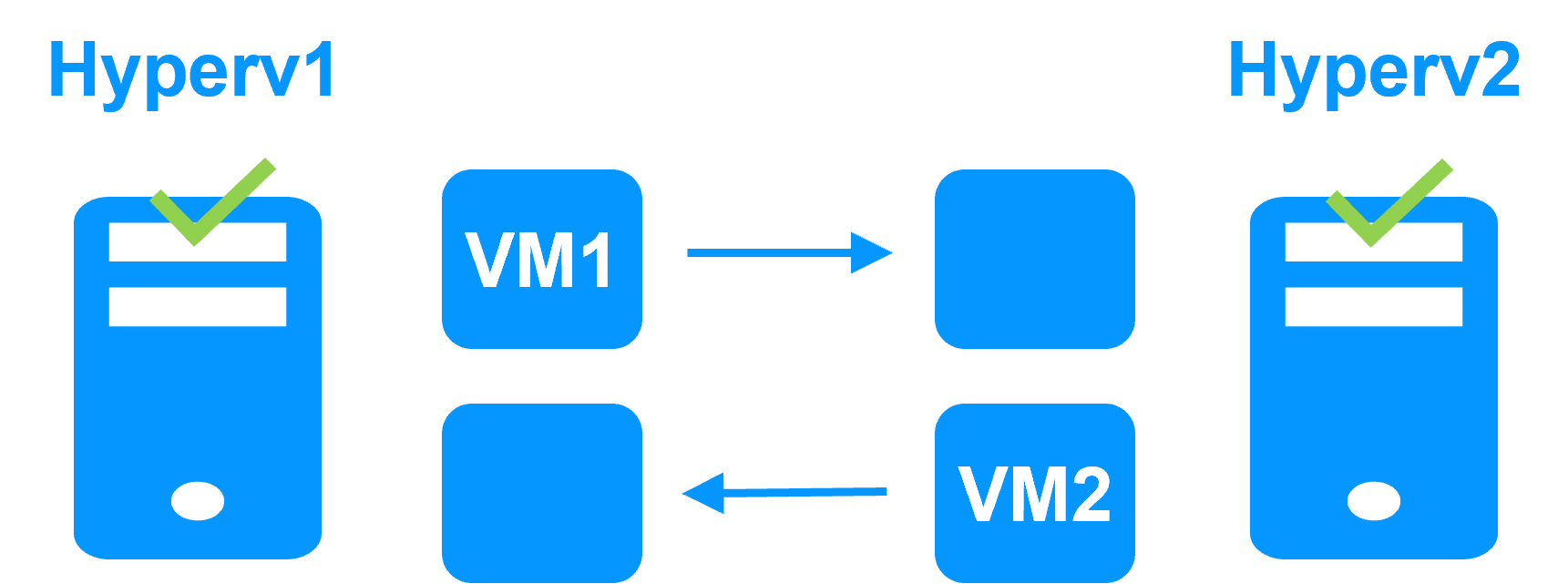

- the Hyper-V cluster or KVM cluster with real-time replication and failover of full virtual machines between 2 active hypervisors,

- the N-1 cluster built by deploying N mirror modules on N+1 servers.

- Demonstration

- Examples of redundancy and high availability solution

- Evidian SafeKit sold in many different countries with Milestone

- 2 solutions: virtual machine cluster or application cluster

- Distinctive advantages

- More information on the web site

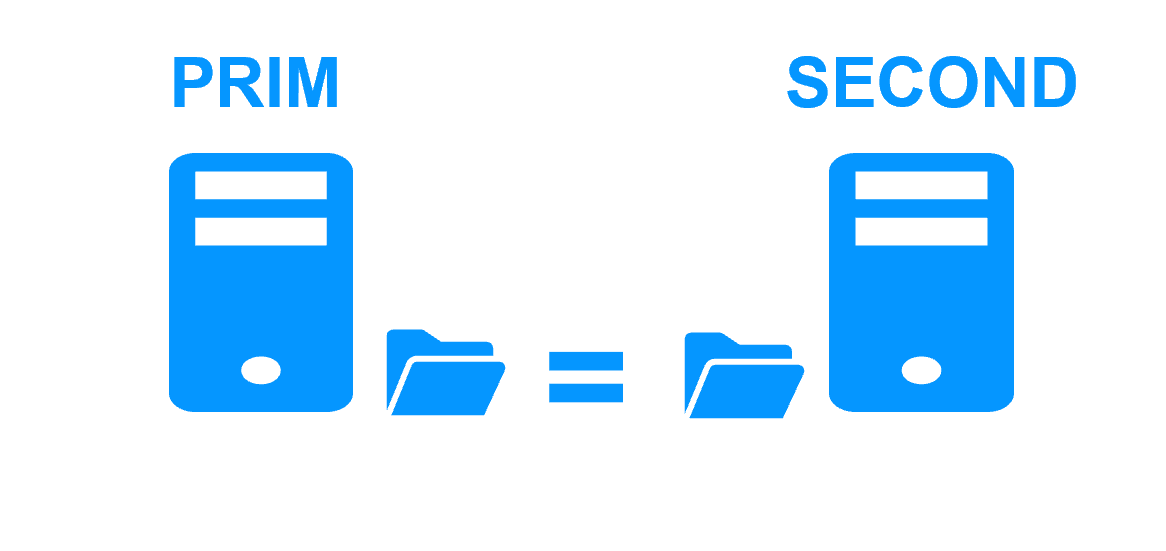

Evidian SafeKit mirror cluster with real-time file replication and failover |

|

|

3 products in 1 More info >  |

|

|

Very simple configuration More info >  |

|

|

Synchronous replication More info >  |

|

|

Fully automated failback More info >  |

|

|

Replication of any type of data More info >  |

|

|

File replication vs disk replication More info >  |

|

|

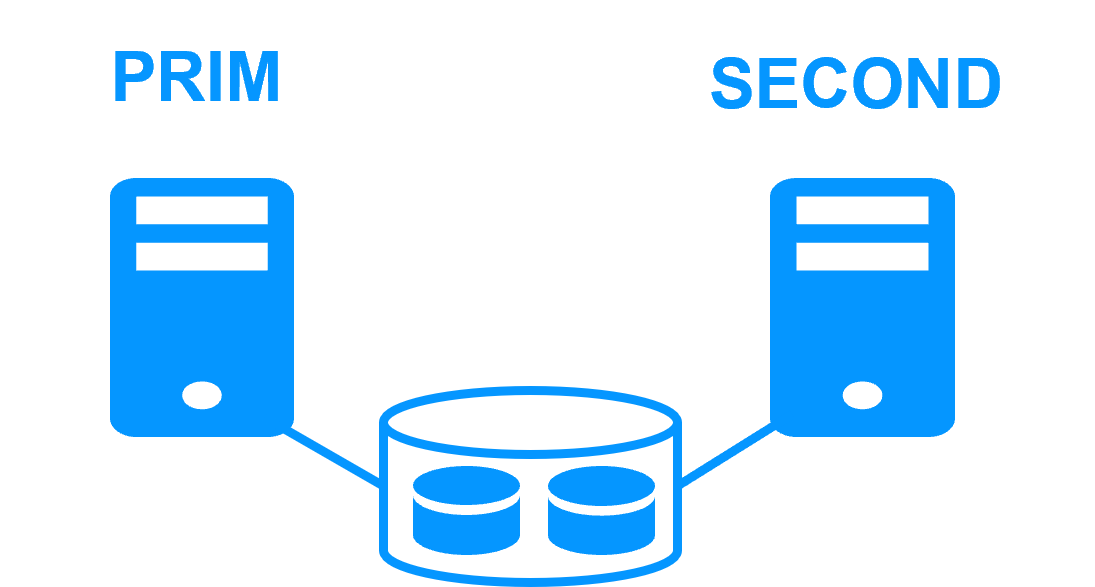

File replication vs shared disk More info >  |

|

|

Remote sites and virtual IP address More info >  |

|

|

Quorum and split brain More info >  |

|

|

Active/active cluster More info >  |

|

|

Uniform high availability solution More info >  |

|

|

RTO / RPO More info >  |

|

Evidian SafeKit farm cluster with load balancing and failover |

|

|

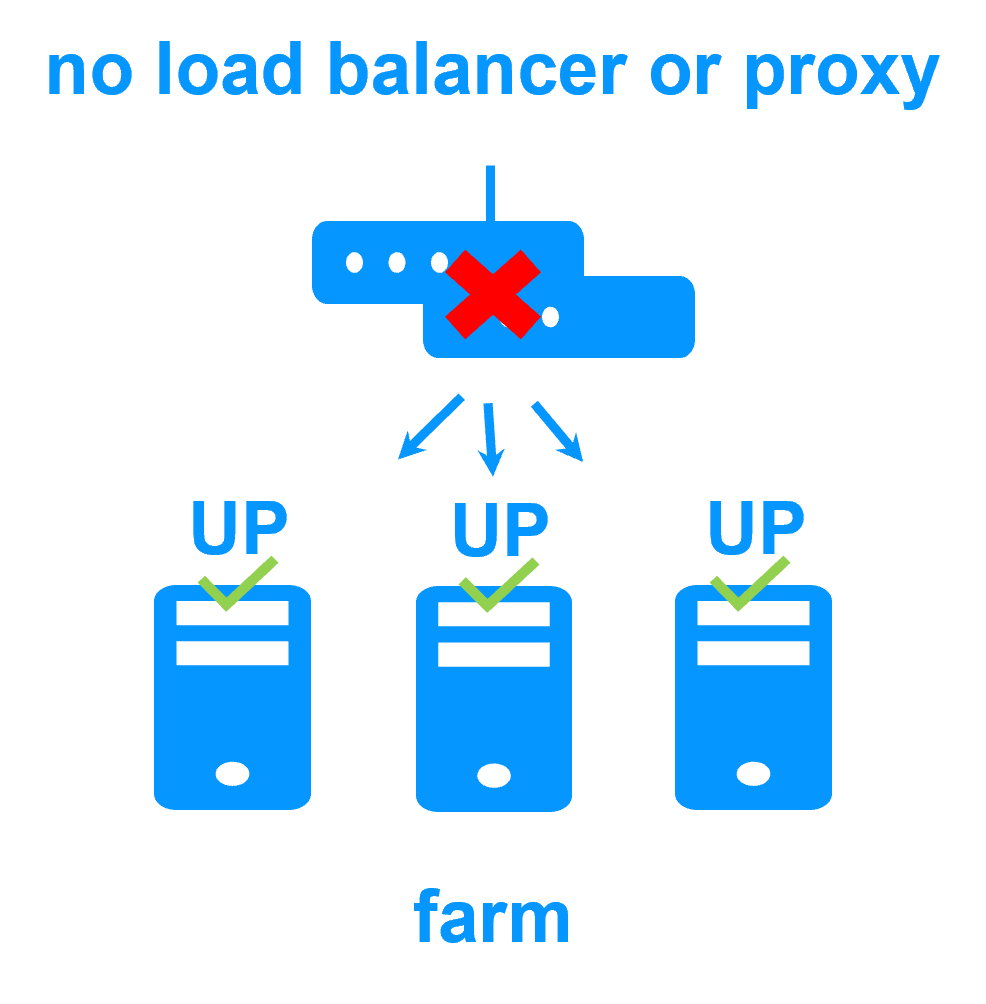

No load balancer or dedicated proxy servers or special multicast Ethernet address

|

|

|

All clustering features

|

|

|

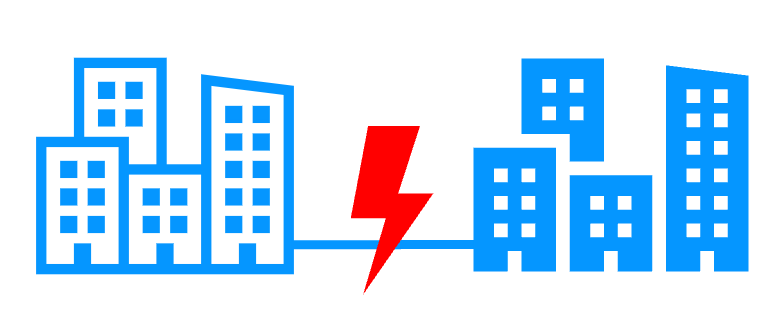

Remote sites and virtual IP address

|

|

|

Uniform high availability solution

|

|

Software clustering vs hardware clustering

|

|

|

|

Shared nothing vs a shared disk cluster |

|

|

|

Application High Availability vs Full Virtual Machine High Availability

|

|

|

|

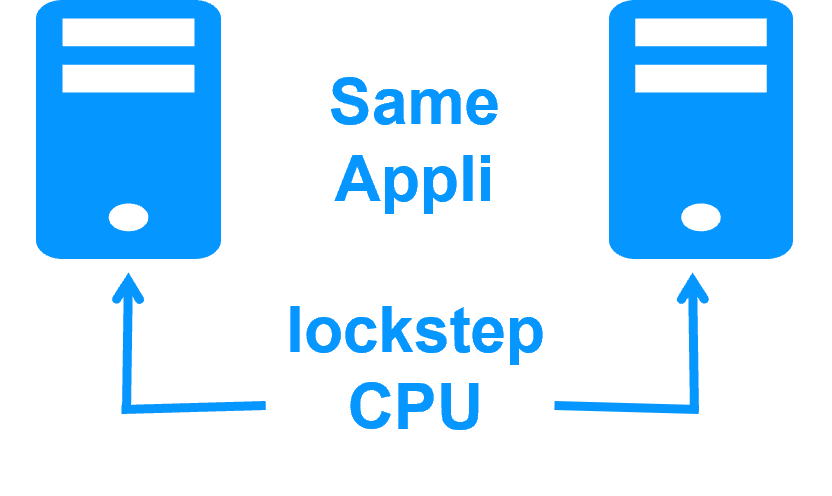

High availability vs fault tolerance

|

|

|

|

Synchronous replication vs asynchronous replication

|

|

|

|

Byte-level file replication vs block-level disk replication

|

|

|

|

Heartbeat, failover and quorum to avoid 2 master nodes

|

|

|

|

Virtual IP address primary/secondary, network load balancing, failover

|

|

|

|

Evidian SafeKit 8.2

All new features compared to SafeKit 7.5 described in the release notes
