What is a shared disk architecture?

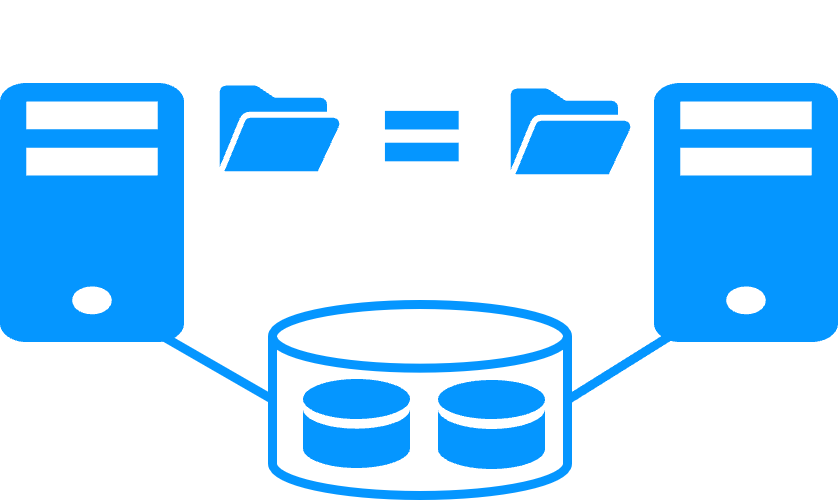

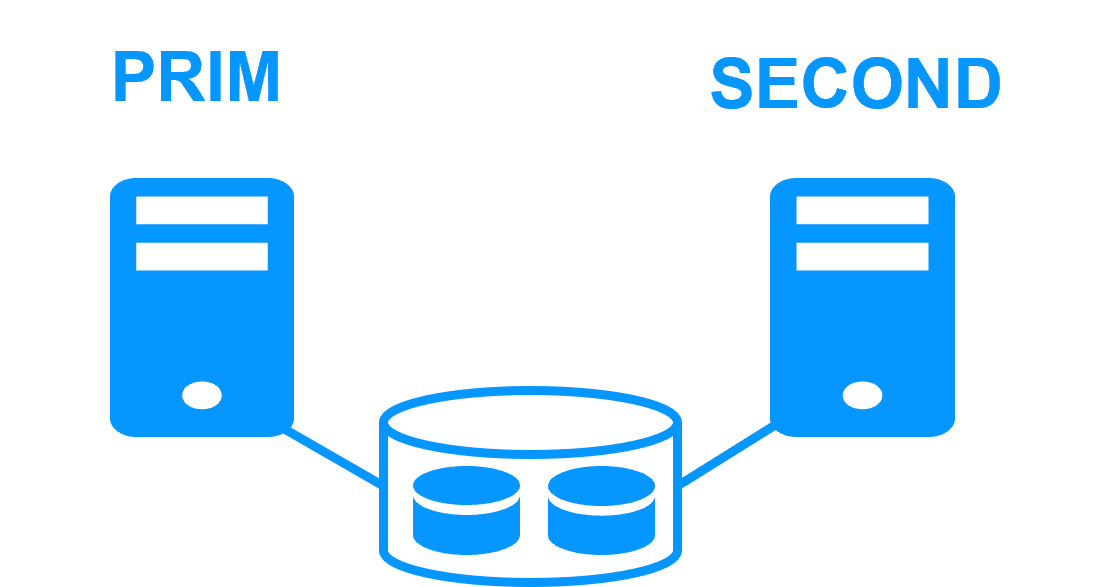

A shared disk architecture (such as Microsoft Failover Cluster) is based on two servers sharing a disk, with automatic application failover in case of a hardware or software failure.
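The failover principle can be illustrated with a minimal in-memory simulation. It assumes an active/passive setup in which one server owns the shared disk at a time. The classes `SharedDisk` and `Node` and the functions `start_on` and `fail_over` are hypothetical names for illustration, not part of any clustering product's API. Because both servers are attached to the same disk, the data written before the failure is still there after the restart on the other server.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SharedDisk:
    """Hypothetical model of the external shared disk, owned by one server at a time."""
    owner: Optional[str] = None
    data: dict = field(default_factory=dict)


@dataclass
class Node:
    name: str
    alive: bool = True
    running_app: bool = False


def start_on(node: Node, disk: SharedDisk) -> None:
    # The node takes ownership of the shared disk, then starts the application.
    disk.owner = node.name
    node.running_app = True


def fail_over(primary: Node, secondary: Node, disk: SharedDisk) -> Node:
    """Restart the application on the secondary node if the primary has failed."""
    if primary.alive:
        return primary  # no failure detected: keep running on the primary
    primary.running_app = False
    # The secondary takes over the same disk, so it sees the data the primary wrote.
    start_on(secondary, disk)
    return secondary


disk = SharedDisk()
server_a, server_b = Node("server-a"), Node("server-b")
start_on(server_a, disk)
disk.data["orders"] = 42          # data written by the application on server-a
server_a.alive = False            # simulate a hardware or software failure
active = fail_over(server_a, server_b, disk)
print(active.name, disk.owner, disk.data["orders"])  # server-b server-b 42
```

A real cluster also needs heartbeat-based failure detection and protection against both servers writing to the disk at once; the sketch leaves these out to focus on the ownership transfer.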

This architecture has hardware constraints: it requires specialized external shared storage, specialized adapter cards installed inside each server, and specialized switches between the servers and the shared storage.

A shared disk architecture has a strong impact on how application data is organized. All application data must be located on the shared disk so that the application can restart on the other server after a failover.
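One practical consequence is that every data path the application uses must be checked against the shared disk. The sketch below assumes a hypothetical mount point `/mnt/shared` and a hypothetical list of application paths; on Windows the shared disk would typically appear as a drive letter instead. Any path outside the mount point lives on a server's local disk and would not be available after a failover.

```python
import posixpath
from pathlib import PurePosixPath

# Hypothetical mount point of the shared disk on whichever server is active.
SHARED_MOUNT = PurePosixPath("/mnt/shared")


def paths_outside_shared_disk(paths: list, mount: PurePosixPath = SHARED_MOUNT) -> list:
    """Return the application data paths that would NOT be available after a failover."""
    outside = []
    for p in paths:
        # Collapse '.' and '..' so a path cannot escape the mount point unnoticed.
        normalized = PurePosixPath(posixpath.normpath(p))
        if not normalized.is_relative_to(mount):
            outside.append(p)
    return outside


app_data = [
    "/mnt/shared/db/data",      # database files on the shared disk
    "/mnt/shared/app/config",   # configuration on the shared disk
    "/var/lib/app/cache",       # local disk: not visible to the other server
    "/mnt/shared/../etc/app",   # escapes the mount point after normalization
]
print(paths_outside_shared_disk(app_data))
# ['/var/lib/app/cache', '/mnt/shared/../etc/app']
```

`PurePath.is_relative_to` requires Python 3.9 or later; it compares path components, so a sibling such as `/mnt/sharedfoo` is correctly reported as outside the mount point.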

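Moreover, on failover, the surviving server must run the file system recovery procedure on the shared disk before it can use the disk and restart the application. This lengthens the recovery time and makes the recovery time objective (RTO) harder to meet.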
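To see why file system recovery matters, the failover time can be modeled as a sequence of phases whose durations add up. The phase names and the numbers below are illustrative assumptions, not measurements of any product: they only show that when the recovery phase grows, the whole failover grows with it.

```python
from dataclasses import dataclass


@dataclass
class FailoverPhases:
    """Hypothetical phase durations in seconds; real values depend on the cluster and workload."""
    failure_detection: float
    disk_takeover: float
    fs_recovery: float
    app_restart: float

    def total(self) -> float:
        # The phases run one after another, so the failover time is their sum.
        return self.failure_detection + self.disk_takeover + self.fs_recovery + self.app_restart


# Illustrative numbers only: a short recovery vs. a long file system check.
quick = FailoverPhases(failure_detection=10, disk_takeover=5, fs_recovery=2, app_restart=30)
slow = FailoverPhases(failure_detection=10, disk_takeover=5, fs_recovery=300, app_restart=30)
print(quick.total(), slow.total())  # 47 345
```

The achievable RTO can be no shorter than this total, so a long file system recovery directly limits it.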

Finally, the solution is not easy to configure: it requires hardware skills to set up the specialized storage equipment, as well as application skills to place all application data on the shared disk.